Will AI Manage Tomorrow’s Cloud Infrastructure?

Vu Anh

Marketing Executive

How do you measure your success when you think about your cloud journey? We’re willing to bet that efficiency and ROI are metrics that are right up there at the top. Yet despite that goal, 68% of IT decision-makers say that their cloud infrastructure has become more expensive in the past year. And to make matters worse, engineers are growing increasingly frustrated with manual cloud management and its tendency to result in mundane and inefficient operations.

Let’s face it: no matter how many donuts you bring to soften the blow, all of this is likely to cause a bit of a downer at the next board meeting.

While the cloud may be keeping to its promise of flexibility, agility and speed to market – there’s a very literal price to be paid for the benefits, and this hasn’t gone unnoticed. According to a recent Gartner report, “The adoption of cloud computing introduces a number of new challenges, but managing cloud spending is proving to be one of the most difficult.”

How have things gotten so out of control?

Organizations often deploy cloud environments at the early stages of their business growth, with goals of scalability and speed in mind. Unfortunately, efficiency and cost margins tend to be less of a concern during this phase, and as time goes on, inaccurate predictions, overages and constantly shifting application requirements make the cloud nearly impossible to manage. This causes engineers to spend too much of their precious time on infrastructure management and less time on innovative projects that contribute to the growth and success of the organization.

Cloud Efficiency: Promise vs Reality

But wait a minute – doesn’t the cloud promise ultimate elasticity and scalability so that you can pay for exactly what you use in a granular way, and keep any extra costs at bay? And doesn’t it enable more flexibility by removing the need to buy and provision physical servers for each new customer, product, or feature?

The truth is, it does and it doesn’t.

On the one hand, right up there in bold print on everything from your EC2 instances, Azure VMs, or Google Cloud Compute Engine for compute to your EBS volumes, Azure Disks, or Persistent Disks for storage is the ability to pay for what you use. In reality though, the continuous need to uphold the shared responsibility model means engineers are stuck manually provisioning resources to meet application needs in order to ensure the product doesn’t crash, lag, or end up costing the organization a fortune.

Since no human can know what application needs are at each moment every single day, allocating resources becomes a tremendous burden that drains engineering resources. As each workload has its own requirements in terms of factors like CPU, memory, disk capacity and networking – this gets complex fast. On top of the workload needs, the infrastructure of SaaS companies directly correlates with their business needs, which makes it critical for engineers to monitor and manage it accordingly. In no time, your engineers have become the babysitters of your cloud infrastructure, constantly adapting cloud resources to try to ensure stability, optimize performance, and increase efficiency, rather than focusing on their core job – development and innovation.

With every new version released, your engineers must measure whether the application requirements have changed and re-evaluate the optimal mix of cloud resources. With this much at stake, it’s no wonder that it’s often easier for staff to lean into over-provisioning, which of course leads us back to that negative impact on your ROI.

And if you think this predicament is just an inconvenience for the engineering department, think again. Manual cloud management can significantly impact your growth as an organization. With hundreds of new SaaS companies founded every year, today’s businesses are competing for the best engineering talent. In this cut-throat market, talented engineers will be drawn to companies that promise to put their skills to good use, enabling them to focus on building products. This means that if your engineers are spending too much time managing infrastructure rather than driving business value, you may have difficulty attracting new talent, which is a huge loss for the future of your business.

Enter, Artificial Intelligence (AI)

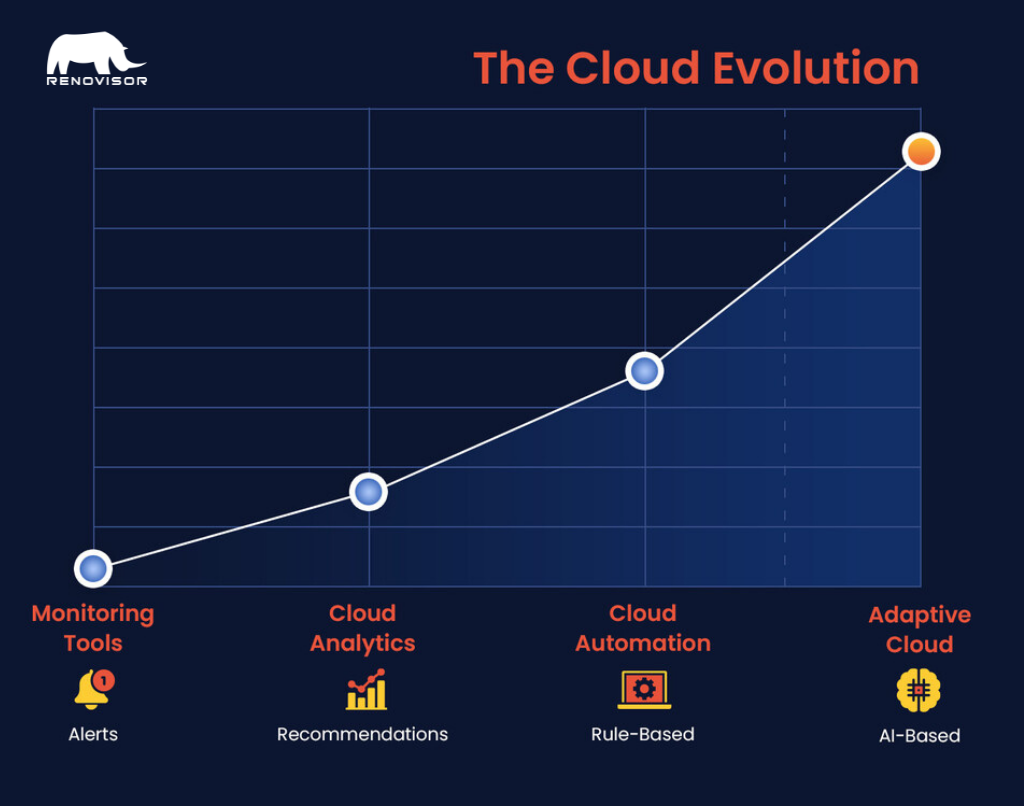

AI has already proven itself to be a transformative technology. Think about examples like anomaly detection, root cause analysis and preventive analysis for security, operations, and engineering use cases. And yet, in many ways we’ve just scratched the surface of what you can achieve with AI. The challenge above is another great example. Provisioning, monitoring and optimizing cloud resources are repetitive and identifiable events that could be performed independently and with a higher level of accuracy by leveraging AI technology.

When you put AI to work in cloud management, here are just a few examples of what you might achieve:

Always-on Monitoring:

Free skilled staff up from their sentry duty by putting smart technology to work instead. Humans can only analyze so many data points without losing accuracy. In contrast, AI can process millions of real-time data points pulled from multiple sources in seconds, consistently and without the slips that come with human fatigue. As a result, you gain the ability to continuously monitor and analyze your infrastructure usage and automatically adapt cloud resources to application needs without any human intervention. Simply put, AI watches around the clock and never sleeps. You can’t expect that level of commitment from Kathy in Engineering without getting an official warning letter from Legal.
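To make the monitoring idea a little more concrete, here is a minimal sketch of one simple way to flag unusual readings in a stream of utilization samples: a rolling z-score check. It is only an illustration, not how any particular product works. The function name `find_anomalies`, the window size, the threshold and the sample values are all assumptions made up for this example.

```python
from collections import deque
from statistics import mean, pstdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate sharply from the recent window.

    Hypothetical helper: a sample is flagged when it sits more than
    `threshold` standard deviations away from the mean of the previous
    `window` samples.
    """
    recent = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(samples):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # Skip the check on a perfectly flat window, where sigma is zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        recent.append(value)
    return anomalies


# Illustrative CPU utilization readings (percent); the spike is at index 6.
cpu_percent = [41, 43, 40, 42, 44, 41, 95, 43, 42]
print(find_anomalies(cpu_percent))
```

A real system would look at many metrics at once and use far richer models, but the principle is the same: compare what is happening now against what recent history says is normal.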

Real-time Reactions and Granular Analysis:

As your compute or disk usage scales up or down, AI can react with lightning-fast reflexes. When it comes to optimizing resources, AI can make instant adjustments to meet the current demand, saving both time and money. You haven’t dreamed up a commitment level this perfect since you were in high school scribbling hearts on a notebook. We’re talking optimal utilization – 24/7. Furthermore, AI enables much more granularity as it can measure compute, storage, and other important resources in parallel, all in a matter of seconds.
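As a rough illustration of what "reacting to demand" can mean in practice, the sketch below applies a simple proportional rule: scale the number of instances so that average utilization moves back toward a target. This is a common autoscaling heuristic rather than anything specific to AI, and a smarter system would layer prediction on top of it. The function name `desired_instances`, the 60% target and the minimum and maximum bounds are assumptions chosen for the example.

```python
import math


def desired_instances(current, utilization_pct, target_pct=60,
                      min_n=1, max_n=20):
    """Suggest an instance count that moves utilization toward the target.

    Hypothetical helper: `current` is the number of running instances and
    `utilization_pct` is their average utilization in percent.
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if target_pct <= 0:
        raise ValueError("target_pct must be positive")
    # Proportional rule: more load per instance than the target means scale out.
    wanted = math.ceil(current * utilization_pct / target_pct)
    # Clamp so a noisy reading cannot scale to zero or run away on cost.
    return max(min_n, min(max_n, wanted))


print(desired_instances(4, 90))   # running hot: suggests scaling out
print(desired_instances(10, 30))  # mostly idle: suggests scaling in
```

The clamp at the end matters as much as the formula: it keeps a single odd reading from shutting everything down or from provisioning far more than the budget allows.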

Smart Predictive Intelligence:

Prediction algorithms can make smart decisions based on historical usage trends and real-time environmental analysis. One example is forecasting how much capacity a company will need to enable the gradual expansion of storage without impacting the ability to react in real-time when necessary. Think about a seasonal business where resources spike with specific events or triggers – AI can allow you to be ready ahead of time. Other use cases could be intelligently estimating how much storage and compute your application needs at any given moment or using Spot Instances or Reserved Instances when they suit your needs.
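For a feel of how forecasting from historical usage might look at its very simplest, the sketch below fits a straight-line trend to past daily storage usage and projects it forward, then adds some headroom. Real prediction engines would account for seasonality and sudden spikes; this is a deliberately basic least-squares example. The names `forecast_usage` and `capacity_to_provision`, the 20% headroom and the usage figures are all assumptions for illustration.

```python
import math


def forecast_usage(history, days_ahead):
    """Project usage `days_ahead` days past the last sample with a linear fit.

    Hypothetical helper: `history` holds one usage reading per day
    (for example, GB of disk in use), oldest first.
    """
    n = len(history)
    if n < 2:
        raise ValueError("need at least two data points")
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    # Ordinary least-squares slope and intercept over days 0..n-1.
    slope = (
        sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
        / sum((x - x_mean) ** 2 for x in range(n))
    )
    intercept = y_mean - slope * x_mean
    return intercept + slope * (n - 1 + days_ahead)


def capacity_to_provision(history, days_ahead, headroom_pct=20):
    """Forecast usage, then add a safety margin and round up."""
    predicted = forecast_usage(history, days_ahead)
    return math.ceil(predicted * (100 + headroom_pct) / 100)


# Illustrative daily storage usage in GB, growing steadily.
storage_gb = [100, 110, 120, 130, 140]
print(forecast_usage(storage_gb, 3))
print(capacity_to_provision(storage_gb, 3))
```

The headroom is what preserves the ability to react in real time: capacity grows gradually along the trend, while the margin absorbs the surprises the trend line did not see coming.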

Transforming Yesterday’s Hopes for AI into Today’s Measurable Outcomes

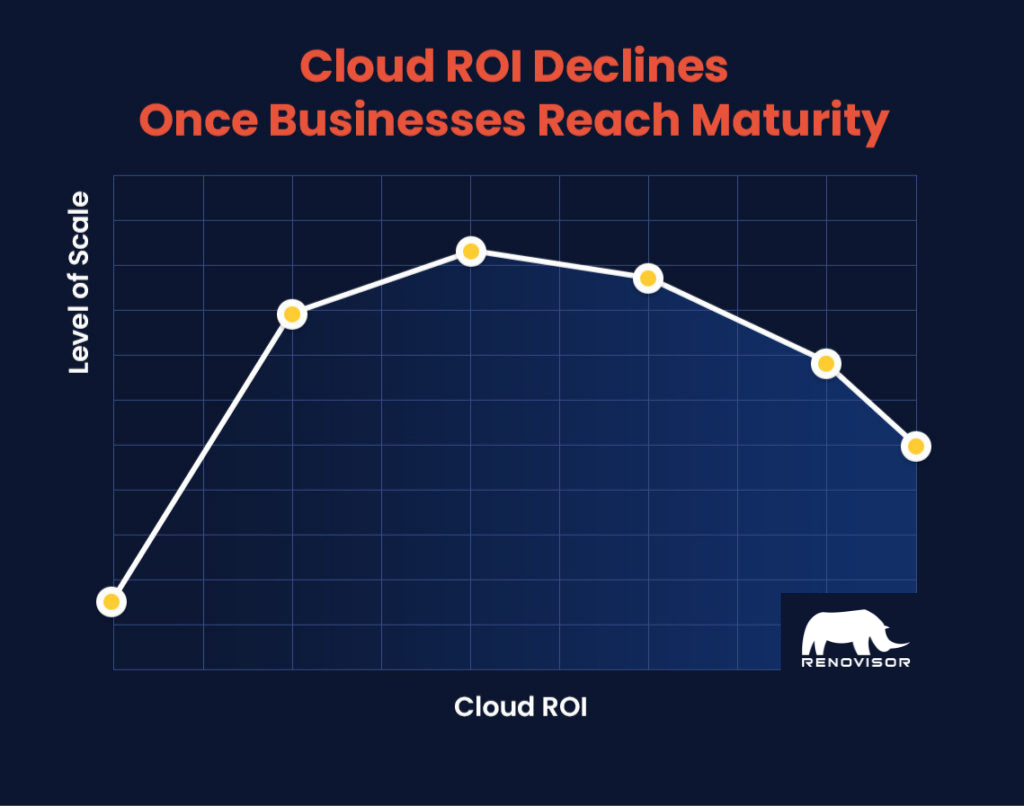

According to researchers at VC firm Andreessen Horowitz, “While cloud clearly delivers on its promise early on in a company’s journey, the pressure it puts on margins can start to outweigh the benefits, as a company scales and growth slows. Because this shift happens later in a company’s life, it is difficult to reverse as it’s a result of years of development focused on new features, and not infrastructure optimization.”

When it comes to cloud management, as environments scale and become increasingly complex, cloud expenditure and intricacies of cloud management become unmanageable. AI is a game-changer for optimizing an existing cloud infrastructure that has matured past the early adoption benefits mentioned above. By reducing costs, automatically provisioning resources, and achieving smart utilization of cloud resources, AI can take the effort off the shoulders of your engineering teams, so you can once again see the benefits of the cloud reflected smartly on both your bottom line and on the relieved and happy faces of your engineering team.