Above all Clouds – Orchestrating and Managing Kubernetes Across Public Cloud and On-Premises

Samira Kabbour

CMO

Driven by the laws of physics, economics, and land, the future of enterprise computing is heading toward a multi-cloud era, as VMware CEO Pat Gelsinger has predicted.

As enterprises increase their cloud adoption, we see them using multiple cloud providers, which leads to hybrid cloud and multi-cloud deployments. According to a study by 451 Research, a third of all organizations use four or more cloud vendors. Other surveys by research organizations and vendors also highlight this trend toward a multi-cloud future. The hybrid and multi-cloud trend is driven by the varying needs of enterprises, including:

- Allowing on-premises applications to work with cloud-based applications

- Empowering developers to use the right cloud service or platform for each application without being constrained by a single cloud provider

- Meeting geographic requirements for performance guarantees

- Complying with data privacy regulations

IT leaders increasingly see multi-cloud as the underlying foundation for rapid innovation, most often within the context of a hybrid environment. However, both hybrid cloud and multi-cloud present challenges for organizations. In this post, we briefly discuss these challenges and highlight how Kubernetes helps mitigate them.

Compute

Hybrid and multi-cloud environments pose unique resource management and efficiency problems. The underlying physical servers are heterogeneous, and so are the virtual machine sizes. Capacity planning is always hard in such heterogeneous environments. Provisioning is confusing because cloud providers generally offer a fixed menu of instance sizes and rarely allow custom sizing. Adding pricing models such as Reserved and Spot instances to capacity planning complicates provisioning even further, resulting in suboptimal resource allocation and usage.
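To make the capacity-planning problem concrete, the sketch below packs a few workloads onto two differently sized machines using first-fit decreasing, a classic bin-packing heuristic. It is not how the Kubernetes scheduler works; the node names, sizes, and workload requests are hypothetical, chosen only to show how heterogeneous machine sizes can strand capacity.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A machine with fixed capacity (sizes here are hypothetical)."""
    name: str
    cpu_m: int            # CPU capacity in millicores
    mem_mib: int          # memory capacity in MiB
    used_cpu_m: int = 0
    used_mem_mib: int = 0

    def fits(self, cpu_m: int, mem_mib: int) -> bool:
        return (self.used_cpu_m + cpu_m <= self.cpu_m
                and self.used_mem_mib + mem_mib <= self.mem_mib)

    def stranded(self) -> tuple[int, int]:
        """Capacity left over after placement: (free CPU millicores, free MiB)."""
        return self.cpu_m - self.used_cpu_m, self.mem_mib - self.used_mem_mib


def first_fit_decreasing(requests, nodes):
    """Place (name, cpu_m, mem_mib) requests, largest first, on the first node that fits."""
    placements, unplaced = {}, []
    for name, cpu_m, mem_mib in sorted(requests, key=lambda r: (r[1], r[2]), reverse=True):
        for node in nodes:
            if node.fits(cpu_m, mem_mib):
                node.used_cpu_m += cpu_m
                node.used_mem_mib += mem_mib
                placements[name] = node.name
                break
        else:
            unplaced.append(name)  # no node had room for this request
    return placements, unplaced


# Hypothetical mixed fleet: one on-premises server and one small cloud VM.
nodes = [Node("on-prem-a", 4000, 8192), Node("cloud-small", 2000, 4096)]
requests = [("api", 1500, 2048), ("worker", 2500, 4096),
            ("cache", 500, 3072), ("batch", 1000, 1024)]

placements, unplaced = first_fit_decreasing(requests, nodes)
print(placements, unplaced)
for node in nodes:
    print(node.name, "stranded (cpu_m, mem_mib):", node.stranded())
```

With these numbers every workload fits, yet on-prem-a ends up with 2048 MiB of memory and no CPU left, while cloud-small has 500 millicores of CPU and no memory left: capacity that is paid for but cannot host another workload of this shape.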

Scaling presents another problem in hybrid and multi-cloud environments. On-Premises environments are limited by the infrastructure already in place, while each cloud provider has its own way of scaling infrastructure. Scaling an application across such heterogeneous environments is no easy task, especially when cost and performance are equally important.

Managing the infrastructure underneath

With containers and Kubernetes, users can pick the Pod size that fits the application's needs without worrying about the vagaries of the virtual machine sizes underneath. There are many options for building the underlying infrastructure for Kubernetes. Most users rely on On-Demand instances, where costs are very high, or Reserved instances, where costs are lower but capacity planning becomes critical. There is a better approach: used effectively, Spot instances can deliver up to 80% in cost savings while keeping resources efficient. However, Spot instances are short-lived and can be reclaimed by the cloud provider at any time.
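The cost trade-off can be illustrated with a little arithmetic. The sketch below computes the hourly cost of a mixed fleet and its savings relative to running everything On-Demand. All prices and instance counts are hypothetical placeholders, not real cloud rates; the Spot price is simply set 80% below On-Demand to match the upper bound mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    hourly_price: float  # hypothetical USD per instance-hour
    instances: int


def fleet_cost(tiers) -> float:
    """Hourly cost of a fleet that mixes pricing models."""
    return sum(t.hourly_price * t.instances for t in tiers)


def savings_vs_on_demand(tiers, on_demand_price: float) -> float:
    """Fraction saved compared with running every instance On-Demand."""
    baseline = on_demand_price * sum(t.instances for t in tiers)
    return 1 - fleet_cost(tiers) / baseline


ON_DEMAND = 0.10  # hypothetical On-Demand price
fleet = [
    Tier("on-demand", ON_DEMAND, 2),
    Tier("reserved", 0.06, 4),  # hypothetical reserved rate
    Tier("spot", 0.02, 4),      # hypothetical: 80% below On-Demand
]

print(f"hourly cost: ${fleet_cost(fleet):.2f}")
print(f"savings vs all On-Demand: {savings_vs_on_demand(fleet, ON_DEMAND):.0%}")
```

With this mix the fleet costs about $0.52 per hour, a 48% saving. An all-Spot fleet at the same hypothetical prices would save 80%, but every instance would then be exposed to reclamation, which is why the pricing models are usually blended.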

With the right analytics and automation, users can combine Spot, Reserved, and On-Demand instances to realize these savings; we will cover that in a future post. Kubernetes abstracts away the complexity of managing the virtualized infrastructure, and with device plugins it is easy to use specialized hardware such as GPU instances.
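As a sketch of what picking the Pod size looks like in practice, the function below builds a Pod manifest that declares CPU and memory for its container and requests a GPU through the `nvidia.com/gpu` extended resource, the resource name advertised by NVIDIA's device plugin. It assumes that plugin is installed on the GPU nodes; the Pod name, image, and sizes are hypothetical. The manifest is built as a Python dict and printed as JSON, which `kubectl apply -f -` accepts just like YAML.

```python
import json


def gpu_pod(name: str, image: str, cpu: str, memory: str, gpus: int) -> dict:
    """Pod manifest sized for the application, plus GPUs via a device plugin."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "resources": {
                    # The Pod size is chosen for the app, not the VM underneath.
                    "requests": {"cpu": cpu, "memory": memory},
                    # Extended resources such as GPUs are set in limits;
                    # the device plugin advertises them on each GPU node.
                    "limits": {"cpu": cpu, "memory": memory,
                               "nvidia.com/gpu": str(gpus)},
                },
            }],
        },
    }


manifest = gpu_pod("trainer", "registry.example.com/trainer:1.0", "2", "8Gi", 1)
print(json.dumps(manifest, indent=2))
```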

With the right infrastructure management layer underneath, Kubernetes can make scaling applications seamless. Kubernetes uses a declarative model: developers describe the desired state, such as replica counts or autoscaling targets, in a YAML file, and Kubernetes controllers continuously reconcile the cluster toward that state, scaling applications without manual intervention.
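Declarative scaling of this kind is typically expressed with a HorizontalPodAutoscaler. The sketch below builds one (API version `autoscaling/v2`) for a hypothetical Deployment named `web`, and alongside it implements a simplified version of the replica calculation documented for the HPA controller: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured bounds. The real controller also accounts for missing metrics, unready Pods, and stabilization windows, which are omitted here.

```python
import json
import math


def hpa_manifest(deployment: str, min_replicas: int, max_replicas: int,
                 cpu_target: int) -> dict:
    """HorizontalPodAutoscaler scaling a Deployment on average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization",
                               "averageUtilization": cpu_target},
                },
            }],
        },
    }


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * current/target), clamped to bounds."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:  # close enough to target: no change
        return current
    return max(min_replicas, min(max_replicas, math.ceil(current * ratio)))


print(json.dumps(hpa_manifest("web", 2, 10, 70), indent=2))
print(desired_replicas(4, 90, 70, 2, 10))  # 4 Pods at 90% CPU vs a 70% target
```

Four Pods averaging 90% CPU against a 70% target scale out to six; at 75% the ratio falls within the tolerance and nothing changes.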

Kubernetes gives a more application-centric view of the compute infrastructure, leading to more efficient use of resources.

Networking

In hybrid cloud environments, enterprises face the operational complexity of combining On-Premises networking (itself a complex task) with that of the cloud. Each cloud provider has its own networking and security policies, which makes it very difficult to run applications on heterogeneous environments like hybrid or multi-cloud. Multi-tenancy is another big problem that adds operational overhead in hybrid and multi-cloud environments. Network security becomes far harder to manage as the network perimeter spans multiple cloud providers and On-Premises environments.

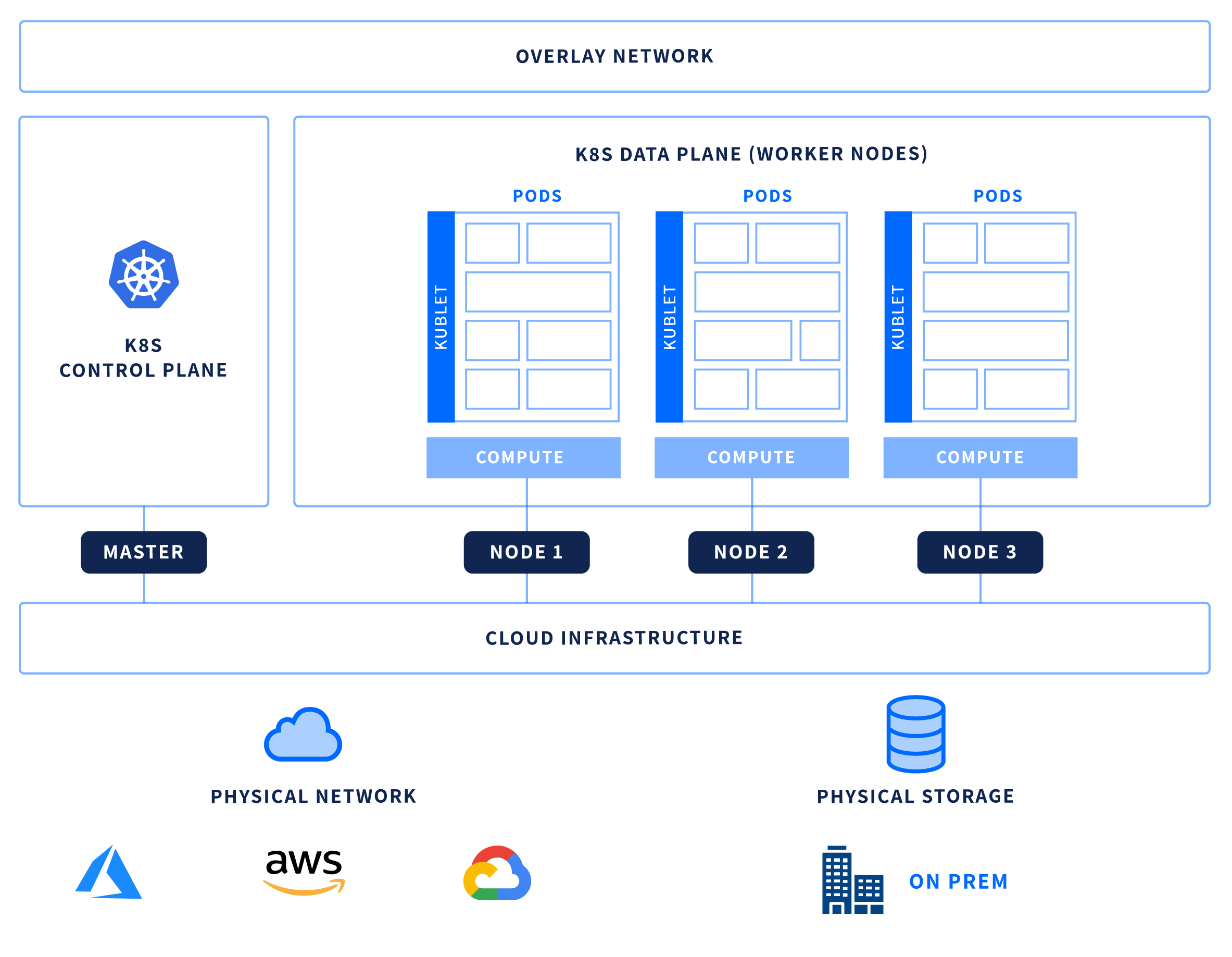

Kubernetes abstracts away most of these complexities and adds a programmatic layer to networking. Through the Container Network Interface (CNI), a specification and plugin model that hides the details of the various networking tools, applications need not worry about the underlying networking complexity. Kubernetes also provides building blocks for multi-tenancy, such as Namespaces, RBAC, and resource quotas, which make it easier to isolate applications and workloads, along with the controls needed to handle network security policies. With Kubernetes, developers can deploy applications without worrying about the plumbing underneath, and CNI largely removes the need to learn specific networking technologies.
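Kubernetes' usual building blocks for separating tenants are Namespaces combined with ResourceQuotas and RBAC. The sketch below generates a Namespace and a ResourceQuota that caps the total CPU, memory, and Pod count a hypothetical team can request. The team name and limits are illustrative assumptions, and RBAC rules are left out for brevity.

```python
import json


def tenant_manifests(team: str, cpu: str, memory: str, max_pods: int) -> dict:
    """A Namespace for one tenant plus a quota on what it may request."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": team},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            "hard": {
                "requests.cpu": cpu,        # total CPU all Pods may request
                "requests.memory": memory,  # total memory all Pods may request
                "pods": str(max_pods),      # maximum number of Pods
            },
        },
    }
    # A v1 List lets both objects be applied in one `kubectl apply -f -`.
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota]}


tenant = tenant_manifests("team-a", "4", "8Gi", 20)
print(json.dumps(tenant, indent=2))
```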

Kubernetes supports Network Policies, which let users specify how groups of Pods communicate with each other and with endpoints outside the cluster. This provides segmentation of the network at layers 3 and 4 (IP addresses and ports). Unlike Docker's approach of forwarding ports from the host to the container, Kubernetes imposes a networking model in which every Pod gets its own IP address and can reach other Pods without NAT, and network implementations must satisfy that model. Kubernetes integrates with the underlying network infrastructure, including overlay networks, through CNI plugins. Most network vendors and cloud providers offer CNI plugins, making it easy to take advantage of fine-grained controls like AWS security groups. Cloud providers such as AWS and Azure allow security groups to be used alongside Network Policies to microsegment the network. Kubernetes thus offers the flexibility to fine-tune the network for any cloud or On-Premises environment.
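A minimal NetworkPolicy sketch: it lets Pods labeled `app=api` in a hypothetical `team-a` namespace receive traffic only from Pods labeled `app=frontend`, on TCP port 8080. Once a Pod is selected by a policy of type `Ingress`, any ingress traffic not explicitly allowed is denied. The labels, namespace, and port are assumptions, and the policy is only enforced if the cluster's CNI plugin implements Network Policies.

```python
import json


def allow_from(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow ingress to target_app Pods only from source_app Pods on one port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{source_app}",
                     "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Only these peers, on this port, may connect.
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


policy = allow_from("team-a", "api", "frontend", 8080)
print(json.dumps(policy, indent=2))
```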

Kubernetes also supports service mesh technologies such as Istio and Linkerd, which extend network security to the microservices running on the platform. Along with features like service discovery, traffic management, and policy enforcement, a service mesh verifies the identity of microservices and authenticates them for inter-service communication, typically through mutual TLS. This lets users run Kubernetes across multi-cloud or hybrid cloud environments without worrying much about microservices communicating across networks with varying degrees of trustworthiness.
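As one concrete example of mesh-level identity, Istio expresses mutual-TLS requirements with a `PeerAuthentication` resource. The sketch below requires mTLS for every workload in a hypothetical `team-a` namespace. It assumes Istio is installed and uses the `security.istio.io/v1beta1` API version; other meshes such as Linkerd configure mTLS differently, so this example is Istio-specific.

```python
import json


def strict_mtls(namespace: str) -> dict:
    """Istio PeerAuthentication that rejects plaintext traffic in a namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        # STRICT: workloads accept only mutual-TLS connections.
        "spec": {"mtls": {"mode": "STRICT"}},
    }


print(json.dumps(strict_mtls("team-a"), indent=2))
```

Applying the same resource in Istio's root namespace (`istio-system` by default) makes the requirement mesh-wide.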

Kubernetes also handles encryption by expecting all communication with the Kubernetes API to be encrypted with TLS, which reduces the operational overhead of securing clusters that run across multi-cloud and hybrid environments.

Storage

Storage is another important challenge in hybrid and multi-cloud environments. Every cloud provider offers its own storage services with a diverse set of APIs, and most On-Premises workloads rely on existing storage solutions that organizations want to keep using. Running applications in such a heterogeneous environment is very difficult, and even software-defined storage solutions are not easy to manage. Kubernetes takes the pain out of this by providing an abstraction called Persistent Volumes, which hold the data persisted by stateful applications. Applications request storage through PersistentVolumeClaims, and StorageClasses let each cluster provision it dynamically, so the same API covers many of the storage types used in hybrid and multi-cloud environments with little additional operational overhead.
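A common way to keep storage portable is to have applications request it through a PersistentVolumeClaim that names a StorageClass, while each cluster defines a StorageClass of that name backed by its own provisioner. The sketch below assumes a class named `fast` (a hypothetical name) and maps it to the block-storage CSI drivers of AWS, Azure, and Google Cloud; an On-Premises cluster would point the same class name at whatever CSI driver its storage system provides.

```python
import json

# CSI drivers for each cloud's block storage.
PROVISIONERS = {
    "aws": "ebs.csi.aws.com",
    "azure": "disk.csi.azure.com",
    "gcp": "pd.csi.storage.gke.io",
}


def storage_class(env: str, name: str = "fast") -> dict:
    """Cluster-specific StorageClass; only the provisioner differs per cloud."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONERS[env],
        # Provision only once a Pod using the claim is scheduled.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def claim(name: str, size: str, class_name: str = "fast") -> dict:
    """Application-side request for storage; identical in every environment."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


for env in PROVISIONERS:
    print(env, json.dumps(storage_class(env)))
print(json.dumps(claim("orders-db", "20Gi"), indent=2))
```

The claim is the same everywhere; only the cluster-level StorageClass changes. `WaitForFirstConsumer` delays provisioning until a Pod that uses the claim is scheduled, so the volume can be created where that Pod runs.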

As VMware CEO Pat Gelsinger has pointed out, the laws of physics will require workloads to be distributed across cloud providers and On-Premises environments to deliver the user experience a modern enterprise needs. Kubernetes provides the abstraction that helps organizations place workloads according to data locality and so deliver a superior user experience. The same direction is reflected in NetApp Kubernetes Service (NKS), which provides seamless storage management across multiple cloud providers such as AWS, Azure, and Google Cloud, with On-Premises support expected in the future. Kubernetes is turning out to be the abstraction that makes multi-cloud and hybrid cloud scenarios a reality.
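Data locality can be expressed through node affinity. The sketch below builds a Pod that the scheduler will only place on nodes whose well-known `topology.kubernetes.io/region` label matches a given region, for example the region that holds the data the Pod reads. Cloud providers typically set this label on their nodes; On-Premises nodes would need to be labeled by hand. The Pod name, image, and region value are hypothetical.

```python
import json

REGION_LABEL = "topology.kubernetes.io/region"  # well-known node label


def pod_near_data(name: str, image: str, region: str) -> dict:
    """Pod that may only be scheduled onto nodes in the given region."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "affinity": {
                "nodeAffinity": {
                    # Hard requirement at scheduling time; not re-evaluated later.
                    "requiredDuringSchedulingIgnoredDuringExecution": {
                        "nodeSelectorTerms": [{
                            "matchExpressions": [{
                                "key": REGION_LABEL,
                                "operator": "In",
                                "values": [region],
                            }],
                        }],
                    },
                },
            },
            "containers": [{"name": name, "image": image}],
        },
    }


manifest = pod_near_data("reporting", "registry.example.com/reporting:1.0", "eu-west-1")
print(json.dumps(manifest, indent=2))
```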

Power of Kubernetes across Clouds

Kubernetes is built to automate the complexity out of heterogeneous environments and to provide a standardized way to encapsulate workloads across multiple cloud providers and On-Premises infrastructure. Thanks to its declarative approach, users do not have to hand-build automation for each workload's needs; instead, Kubernetes provides the hooks that make application deployment in hybrid and multi-cloud environments seamless. The following table explains the various hooks in Kubernetes that provide the necessary automation for seamless application deployment in heterogeneous environments.

Kubernetes brings large-scale automation to heterogeneous environments without users having to deal with the complexities of managing them. This level of abstraction, along with its declarative approach to orchestration and management, makes Kubernetes the right abstraction for hybrid and multi-cloud environments. As enterprises embrace multiple cloud providers, application portability becomes a major concern, and Kubernetes is uniquely positioned to address it.

For customer examples, see XYZ video or ABC blog post on our site. In a follow-up post, we cover combining the power of Kubernetes with our Spotinst solutions and go deeper into the benefits this brings to organizations like yours.

Source: Spotinst (Kevin McGrath, VP of Architecture)